Princeton University researchers have used a novel virtual reality and brain imaging system to detect a form of neural activity underlying how the brain forms short-term memories that are used in making decisions.

By following the brain activity of mice as they navigated a virtual reality maze, the researchers found that populations of neurons fire in distinctive sequences when the brain is holding a memory. Previous research centered on the idea that populations of neurons fire together, in patterns similar to one another, during the memory period.

The study was performed in the laboratory of David Tank, who is Princeton's Henry L. Hillman Professor in Molecular Biology and co-director of the Princeton Neuroscience Institute. Both Tank and Christopher Harvey, who was first author on the paper and a postdoctoral researcher at the time of the experiments, said they were surprised to discover the sequential firing of neurons. The study was published online on March 14 in the journal Nature.

The findings give insight into what happens in the brain during "working memory," which is used when the mind stores information for short periods of time prior to acting on it or integrating it with other information. Working memory is a central component of reasoning, comprehension and learning. Certain brain disorders such as schizophrenia are thought to involve deficits in working memory.

"Studies such as this one are aimed at understanding the basic principles of neural activity during working memory in the normal brain. However, the work may in the future assist researchers in understanding how activity might be altered in brain disorders that involve deficits in working memory," said Tank.

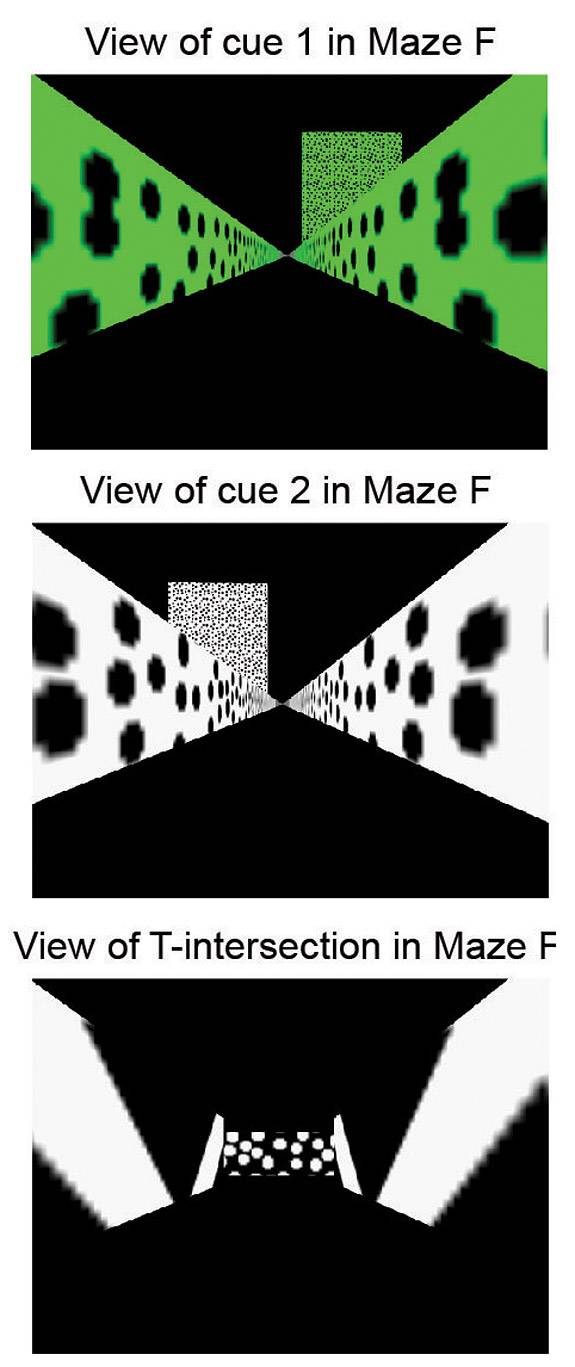

Using a virtual reality maze and brain imaging system, Princeton researchers have detected a form of neural activity underlying the formation of short-term memories used in decision-making. These panels show the view of the virtual reality maze as seen by the mouse. The top panel shows a cue or sign that indicates to the mouse to turn right to receive a water reward. The middle panel shows a cue telling the mouse to turn left. The bottom panel shows the view at the T-intersection of the maze. (Image courtesy of Nature, Christopher Harvey and David Tank)

In the study, the patterns of sequential neuronal firing corresponded to whether the mouse would turn left or right as it navigated a maze in search of a reward. Different patterns corresponded to different decisions made by the mice, the Princeton researchers found.

The sequential neuronal firing patterns spanned the roughly 10-second period that it took for the mouse to form a memory, store it and make a decision about which way to turn. Over this period, distinct subsets of neurons were observed to fire in sequence.

The finding contrasts with many existing models of how the brain stores memories and makes decisions, which are based on the idea that firing activity in a group of neurons remains elevated or reduced during the entire process of observing a signal, storing it in memory and making a decision. In that scenario, memory and decision-making are determined by whole populations of neurons either firing or not firing in the region of the brain involved in navigation and decision-making.

The uniqueness of the left-turn and right-turn sequences meant that the brain imaging experiments essentially allowed the researchers to perform a simple form of "mind reading." By imaging and examining the brain activity early in the mouse's run down the maze, the researchers could identify the neural activity sequence being produced and could reliably predict which way the mouse was going to turn several seconds before the turn actually began.
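The idea of predicting a turn from early activity can be illustrated with a minimal sketch. It is not the analysis used in the study: the activity values are invented toy numbers, the function names are hypothetical, and template matching by correlation is just one simple way such a prediction could be made. It assumes that one subset of neurons is active early in left-turn runs and another subset early in right-turn runs.

```python
# Illustrative sketch only: toy data and a simple template-matching decoder,
# not the method reported in the paper. All names here are hypothetical.
# Each trial is a list of per-neuron activity values averaged over the
# early part of a run. Assumption: neurons 0-2 are active early in
# left-turn runs and neurons 3-5 early in right-turn runs.

def mean_template(trials):
    """Average activity per neuron across a list of trials."""
    n = len(trials)
    return [sum(column) / n for column in zip(*trials)]

def correlation(a, b):
    """Pearson correlation between two equal-length activity vectors."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5

def predict_turn(early_activity, left_template, right_template):
    """Pick the turn whose template best matches the early activity."""
    left_score = correlation(early_activity, left_template)
    right_score = correlation(early_activity, right_template)
    return "left" if left_score > right_score else "right"

# Toy "training" runs with known outcomes.
left_trials = [[0.9, 0.8, 0.7, 0.1, 0.0, 0.1], [0.8, 0.9, 0.6, 0.0, 0.1, 0.2]]
right_trials = [[0.1, 0.0, 0.2, 0.8, 0.9, 0.7], [0.0, 0.1, 0.1, 0.9, 0.8, 0.6]]
left_template = mean_template(left_trials)
right_template = mean_template(right_trials)

# Early activity from a new run, before the mouse reaches the intersection.
new_trial = [0.7, 0.9, 0.8, 0.2, 0.1, 0.0]
prediction = predict_turn(new_trial, left_template, right_template)
```

In this toy case the new run resembles the left-turn template, so the sketch labels it a left turn. Real recordings would be noisier and would involve many more neurons and time points.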

The sequences of neural activity discovered in the new study take place in a part of the brain called the posterior parietal cortex. Previous studies in monkeys and humans indicate that the posterior parietal cortex is a part of the brain that is important for movement planning, spatial attention and decision-making. The new study is the first to analyze it in the mouse. "We hope that by using the mouse as our model system we will be able to utilize powerful genetic approaches to understand the mechanisms of complex cognitive processes," said Harvey.

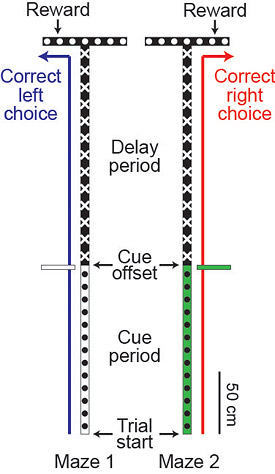

This schematic shows the two virtual reality mazes used in the study. As the mouse ran down the long corridor, it would see a cue on the right or left side of the corridor indicating that it should turn right or left when it got to the T-intersection. At the "cue offset," the mouse would pass the sign and no longer see it, so the animal had to remember which sign it saw until it reached the T-intersection and made the turn. (Image courtesy of Nature, Christopher Harvey and David Tank)

Navigating the maze

Princeton researchers studied these neurons firing in the posterior parietal cortex of mice while they navigated a maze in search of a reward. The simple maze, generated using a virtual reality system, consisted of a single long corridor that ended in a T-intersection, requiring the mouse to choose to turn left or right.

As the mouse ran down the long corridor, it saw visual patterns and object signals on the right or left side of the corridor, much as a motorist driving down a highway might see a sign indicating which way to turn at an upcoming intersection. If the mouse turned in the direction indicated by the signal, it found the reward of a drink of water.

The experimental setup required that the mouse notice the signal and remember which side of the corridor the signal was on so that it could make the correct decision when it reached the T-intersection. If it turned the wrong way, the mouse would not find the reward. After several training runs, the mice made the right decision more than 90 percent of the time.

In cases where the mice made errors, the neuronal firing started out with one distinct pattern of sequential firing and then switched over to another pattern. If the mouse saw a signal indicating that it should turn right but made a mistake and turned left, its brain started off with the sequence indicating the visual cues for a future right turn but then switched over to the sequence indicative of a future left turn. "In these cases, we can observe the mouse changing its memory of past events or plans for future actions," said Tank.

The mouse training and imaging experiments were conducted by Harvey, who is now an assistant professor of neurobiology at Harvard Medical School. Harvey was assisted in some experiments by Philip Coen, a graduate student in the Princeton Neuroscience Institute.

Constructing a virtual reality

In place of a physical maze, the researchers created one using virtual reality, an approach that has been under development in the Tank lab for the last several years. The mice walked and ran on the surface of a spherical treadmill while their heads remained stationary in space, which is ideal for brain imaging. Computer-generated views of virtual environments were projected onto a wide-angle screen surrounding the treadmill. Motion of the ball produced by the mouse walking and turning was detected by optical sensors on the ball's equator and used to change the visual display to simulate motion through a virtual environment.
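How ball motion might translate into movement through the virtual maze can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the lab's software: it assumes the sensor readings have already been converted into a forward distance and a change in heading, and the function name `update_pose` and its parameters are hypothetical.

```python
import math

def update_pose(x, y, heading, forward, turn):
    """Advance the virtual position by one (hypothetical) sensor reading.

    forward: distance the ball surface moved along the running direction
    turn:    change in heading, in radians (positive is counterclockwise)
    """
    heading = heading + turn
    x = x + forward * math.cos(heading)
    y = y + forward * math.sin(heading)
    return x, y, heading

# Start at the base of the corridor, facing "up" the corridor (+y direction).
x, y, heading = 0.0, 0.0, math.pi / 2

# Ten readings of straight running move the view down the corridor.
for _ in range(10):
    x, y, heading = update_pose(x, y, heading, forward=1.0, turn=0.0)

# One reading with a quarter turn to the right, then two units forward.
x, y, heading = update_pose(x, y, heading, forward=2.0, turn=-math.pi / 2)
```

After each update, a rendering step would redraw the projected scene from the new position and heading, which is what makes the stationary mouse appear to move through the maze.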

To image the brain, the researchers employed an optical microscope that used infrared laser light to look deep below the surface in order to visualize a population of neurons and record their firing.

The neurons imaged in these mice contained a "molecular sensor" that glows green when the neurons fire. The sensor, developed in the lab of Loren Looger, group leader at the Howard Hughes Medical Institute's Janelia Farm Research Campus, consisted of a green fluorescent protein engineered to glow in response to calcium ions, which flood into the neuron when it fires. The green fluorescent protein (GFP) from which the sensor was developed is widely used in biological research and was discovered at Princeton in 1961 by former Princeton researcher Osamu Shimomura, who earned a Nobel Prize in chemistry in 2008 for the discovery.

The virtual reality system, combined with the imaging system and calcium sensor, allowed the researchers to see populations of individual neurons firing in the working brain. "It is like we are opening up a computer and looking inside at all of the signals to figure out how it works," said Tank.

These studies of populations of individual neurons, termed cellular-resolution measurements, are challenging because the brain contains billions of neurons packed tightly together. The instrumentation developed by the Tank lab is one of the few that can record the firing of groups of individual neurons in the brain when a subject is awake. Most studies of brain function in humans involve studying activity in entire regions of the brain using a tool such as magnetic resonance imaging (MRI) that averages together the activity of many thousands of neurons.

"The data reveal quite clearly that at least some form of short-term memory is based on a sequence of neurons passing the information from one to the other, a sort of 'neuronal bucket brigade,'" said Christof Koch, a neuroscientist who was not involved in the study. Koch is the chief scientific officer for the Allen Institute for Brain Science in Seattle and the Lois and Victor Troendle Professor of Cognitive and Behavioral Biology at the California Institute of Technology in Pasadena.

The development and application of new technologies for measuring and modeling neural circuit dynamics in the brain is the focus of Princeton's new Bezos Center for Neural Circuit Dynamics. Created with a gift of $15 million from Princeton alumnus Jeff Bezos, the founder and chief executive officer of Amazon.com, and alumna MacKenzie Bezos, the center supports the study of how neural dynamics represent and process information that determines behavior.

This work was supported by the National Institutes of Health, including a National Institutes of Health Challenge Grant, part of the American Recovery and Reinvestment Act of 2009. Harvey was supported by the Helen Hay Whitney Foundation and a Burroughs Wellcome Fund Career Award at the Scientific Interface.