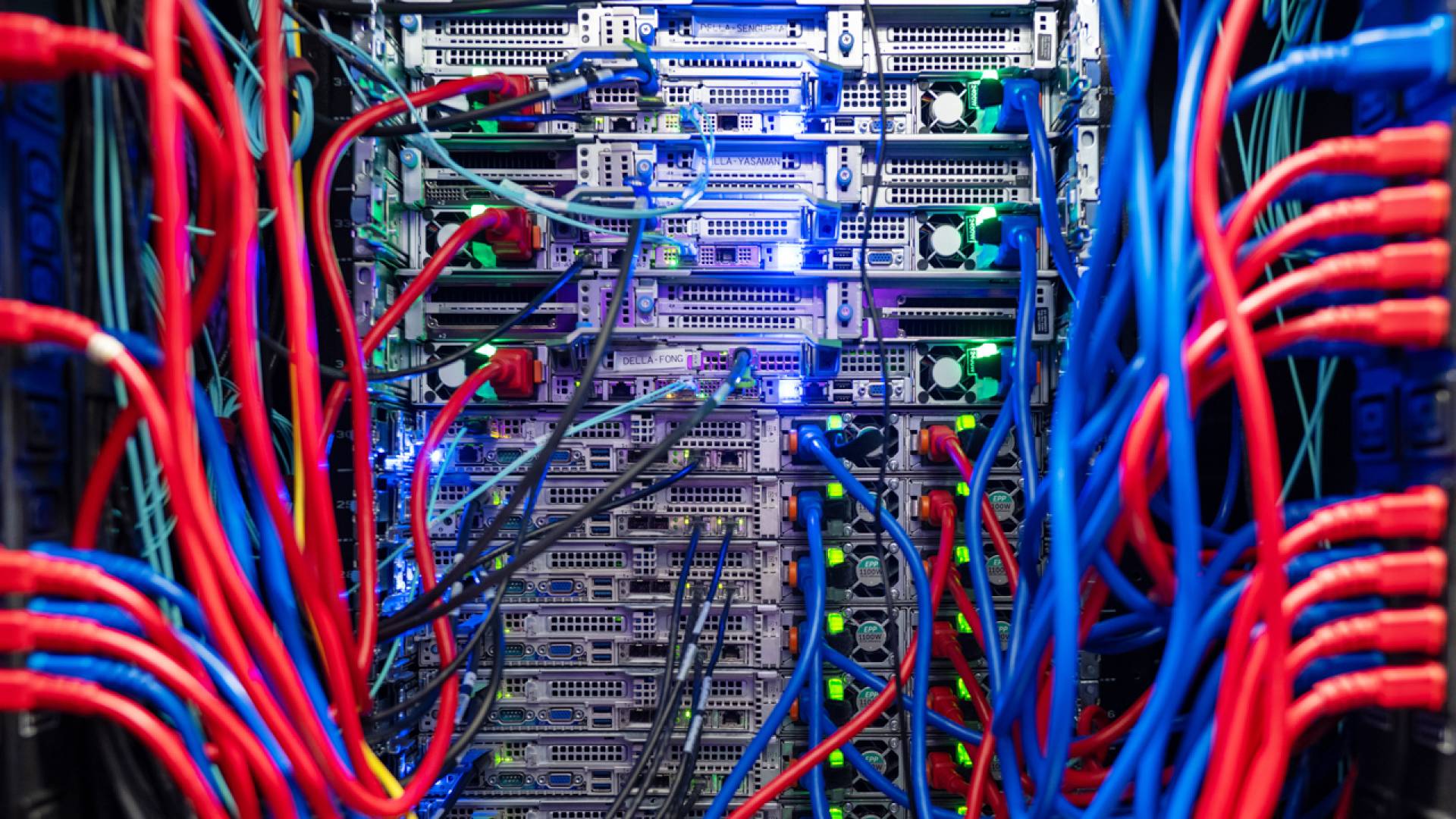

Princeton University’s investments to seed new AI initiatives and support AI research across campus include faculty research grants, a computational infrastructure with 300 Nvidia H100 GPUs, data storage infrastructure with the support of Princeton Research Computing, and funding for research staff, including postdocs, research software engineers and data scientists.

At Princeton, interdisciplinary collaborations of researchers are using artificial intelligence to accelerate discovery across the University in fields ranging from neuroscience to Near Eastern studies.

Princeton experts are also pushing the limits of AI technology to make it more accurate and efficient, to understand how AI’s uncanny large language models work, and to address the technology’s ethical, fairness and policy implications.

And they are doing it all at a university small enough to allow for easy collaboration across disciplines but big enough to have the field-leading researchers, computing resources and institutional commitment needed to help shape the fast-developing field.

As the technology races forward, Princeton has developed a series of major research initiatives to move at the speed of AI discovery. “Given the rapid advances in AI and its applications, any static framing of research in the field will quickly become out-of-date,” said University Provost Jennifer Rexford, a computer scientist.

“Instead of drawing permanent lines in the sand or waiting until the dust settles, Princeton is remaining nimble by launching a set of high-intensity research projects led by groups of interdisciplinary faculty, without the logistical barriers that have traditionally slowed universities down,” Rexford said.

At the same time, said Andrea Goldsmith, dean of Princeton’s School of Engineering and Applied Science, every facet of the University’s AI enterprise — from the foundational computer science to the development of ethical and policy guardrails — reflects a commitment to human values in the spirit of Princeton’s informal motto: “in the nation’s service and the service of humanity.”

“Princeton can be uniquely impactful in the very rapidly accelerating AI space because we think about research in AI and applications of AI through the lens of serving humanity,” Goldsmith said.

Inquiry at the speed of AI discovery

The nimble, high-intensity initiatives push the boundaries of multiple fields at once, with significant funding from the University endowment.

- Princeton Language and Intelligence (PLI) focuses on unpacking the black box of large language models like ChatGPT and GPT-4, and creating smaller, more targeted language models.

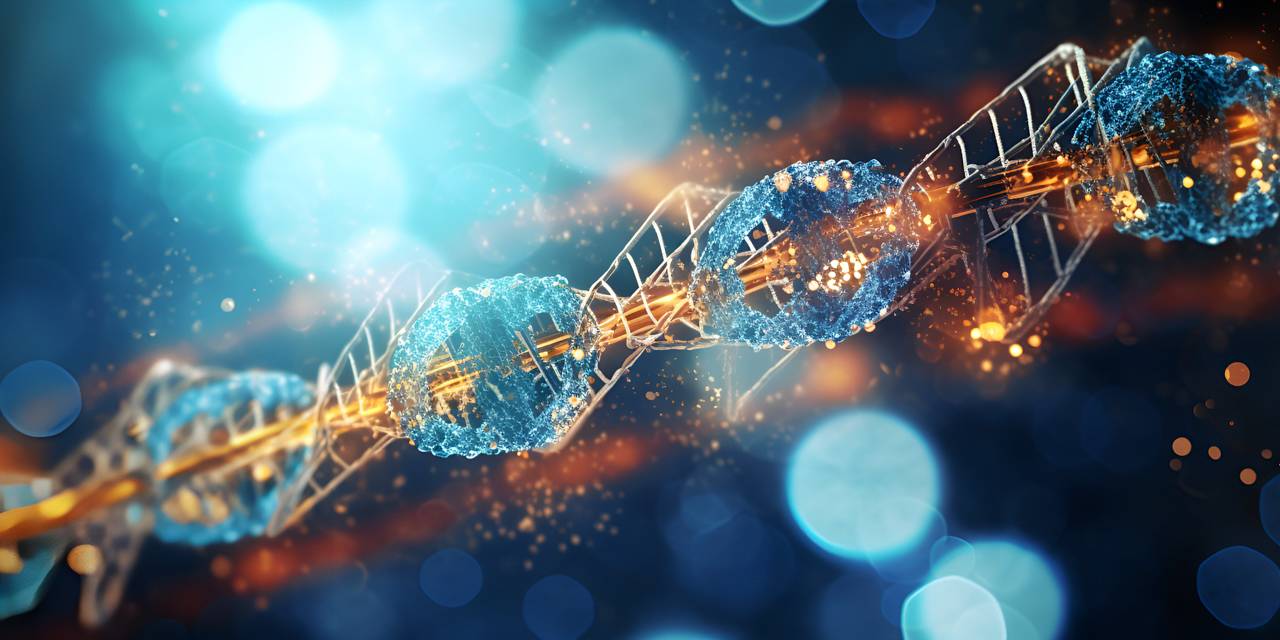

- Princeton Precision Health (PPH) uses data from the genomic to the socioeconomic and the environmental to the clinical to improve health policy and patient health outcomes.

- AI for Accelerating Invention (AI2) will tackle engineering challenges like containing plasma in research on fusion, and revolutionizing material design and structure.

- Natural and Artificial Minds (NAM) will focus on how our understanding of human intelligence supports developments in artificial intelligence, and vice versa.

The University’s investments to seed the new initiatives and support AI research across campus include faculty research grants, a state-of-the-art computational infrastructure with 300 Nvidia H100 GPUs, data storage infrastructure with the support of Princeton Research Computing, and funding for research staff, including postdocs, research software engineers and data scientists.

Princeton Language and Intelligence, which launched in September 2023 under the direction of Sanjeev Arora, draws on Princeton’s leading researchers in large language models (LLMs), including Karthik Narasimhan, co-inventor of GPT; Danqi Chen, co-developer of RoBERTa and a range of language models; and Arora, who is leading efforts to understand and explain the mathematical underpinnings of LLMs.

With the new Nvidia cluster, the team will be able to study at scale how LLMs work, with the promise of a new, open-source approach that keeps the technology in the public sphere and academic researchers at the forefront of progress. “It’s important to have all of that expertise out in the open,” said Arora, who is Princeton’s Charles C. Fitzmorris Professor in Computer Science. “As disinterested parties, universities can help society and government understand and manage AI.”

Princeton Precision Health, which launched in May 2022, aims to transform human health at all levels by developing and applying AI models that integrate data across domains, including genomics, and social and environmental determinants of health. The goal is to improve both clinical practice and health policy for kidney disease, diabetes, immune system disorders, cardiovascular health, neurodevelopment and neurodegeneration, and neuropsychiatric diseases. The initiative will also address the impact of technology use on cognition and mental health.

Olga Troyanskaya, Professor of Computer Science and the Lewis-Sigler Institute for Integrative Genomics and director of Princeton Precision Health

Olga Troyanskaya, the professor of computer science and genomics who leads the initiative, says the team’s computer scientists are developing “purpose-built, domain-specific models” that draw on deep subject-matter expertise from the biological sciences and medicine, informed by PPH’s psychologists, policy experts and ethicists.

Troyanskaya said the University’s seed funding and its un-siloed, interdisciplinary approach are essential to building trustworthy AI tools to improve human health. The inspiration alone is not enough, she said. “You need a framework: people infrastructure, budget infrastructure, data infrastructure, computational infrastructure,” she said. “AI research depends heavily on all of those, and none of them are easy or cheap.”

AI for Accelerating Invention, launching soon, will focus on the potential of artificial intelligence and machine learning tools to transform engineering design and fabrication. Participating Princeton researchers include Ryan Adams and Mengdi (Mandy) Wang. In one project, a collaboration with the Omenn-Darling Bioengineering Institute, researchers are using AI to engineer RNA and biomaterials for novel therapeutics. Other applications include new materials discovery, semiconductor chip design, robots that adapt to their environments or tasks, and greener architectural design and practice.

Wang, an associate professor in the Department of Electrical and Computer Engineering and the Center for Statistics and Machine Learning and a former senior visiting research scientist with Google DeepMind, studies the methodology of generative AI, reinforcement learning and machine learning theory, and how they can accelerate scientific discoveries in healthcare, biotech, drug discovery and intelligent systems. Adams, a professor of computer science and the associate chair of the Department of Computer Science, is accelerating design and discovery by improving the underlying tools that make machine learning possible.

Natural and Artificial Minds, also launching soon, will focus on the connections between research in human and machine intelligence — and how they can inform each other. Princeton researchers exploring those connections include Tom Griffiths, who directs Princeton’s Center for Statistics and Machine Learning (CSML) and is Princeton’s Henry R. Luce Professor of Information Technology, Consciousness, and Culture of Psychology and Computer Science; Sarah-Jane Leslie, the Class of 1943 Professor of Philosophy and founding director of the Program in Cognitive Science; Jonathan Cohen, the Robert Bendheim and Lynn Bendheim Thoman Professor in Neuroscience and a professor of psychology and neuroscience; and Tania Lombrozo, the Arthur W. Marks ’19 Professor of Psychology and director of the Program in Cognitive Science.

In his research, Griffiths is using ideas from AI to understand the principles that underlie how humans solve problems in everyday life. Leslie is building mathematical models that describe human cognition and testing those computations against human performance. Cohen applies what science knows about human learning and its basis in brain function to help machine learning become more efficient, accurate and understandable. Lombrozo’s work addresses fundamental questions about learning, reasoning and decision-making using the tools of experimental psychology and analytic philosophy.

Core strengths in AI

Within the initiatives and across the entire campus, Princeton has core strengths in three leading areas of AI research: advancing the capabilities themselves, using them for discovery and applications between and across disciplines, and grappling with the societal implications of AI.

“All of those three are tightly integrated, we’re strong in all of those areas, and they all benefit from each other,” said Arvind Narayanan, who directs Princeton’s Center for Information Technology Policy (CITP), a collaboration between the University’s engineering and public policy schools.

When it comes to pushing the limits of the technology, Princeton’s current AI researchers draw on generations of computing experts who have shaped the technical and theoretical underpinnings of AI and its applications, said Elad Hazan, professor of computer science.

“We’ve always been at the forefront of computer science and AI, starting from [Alan] Turing, the founder of modern computer science, who did his Ph.D. here. He wrote the first paper proposing the question, ‘Can machines be intelligent?’ And now we are devising more and more interesting answers to this question he asked so long ago,” Hazan said.

Princeton’s recent multimillion-dollar investment in the Nvidia GPU cluster opens extraordinary opportunities for University researchers to develop a new paradigm for large language models beyond today’s two extremes: closed, proprietary models “about which we do not know any details,” as Arora said, and fully open-source models “about which we know all the details, but frankly they aren’t very good.”

“It is here in the open space that Princeton will lead,” Arora said, with open-source language models that support academic inquiry at America’s research universities and engender public trust.

Many Princeton computer engineers and scientists are focused on designing smaller models that are both less expensive and more energy efficient. “If we’re going to make this technology more pervasive, and more importantly, not have it locked up by a small number of companies, academic research on how to make smaller models is itself an important area of study,” said Rexford.

Accelerating interdisciplinary discovery

“Much of the impact of AI has been as it comes into contact with other disciplines and other kinds of problems in the world,” said Griffiths.

At Princeton, scholarly excellence is distributed across disciplines, and the small, intimate campus brings together serious thinkers to apply artificial intelligence to the world’s great challenges and to align the technology with human values. “Interdisciplinary really is the magic word,” said Olga Russakovsky, an associate professor of computer science who researches computer vision and how to encode protections against bias.

In the natural sciences, humanities and social sciences, teams of experts are applying AI to new and old problems. Geneticists are partnering with linguists to decode DNA. Social scientists are teasing out fine-tuned economic disparities from census data.

Adji Bousso Dieng, assistant professor of computer science and director of the Vertaix lab, is working at the intersection of AI and the natural sciences to solve a wide range of scientific problems, including predicting COVID variants, discovering novel materials, and accelerating molecular simulations.

“AI is really accelerating the sciences,” Dieng said. “This is not a prediction for the future. Right now we are seeing this acceleration happen in materials science, in biology, and it is going to happen in other fields as well.”

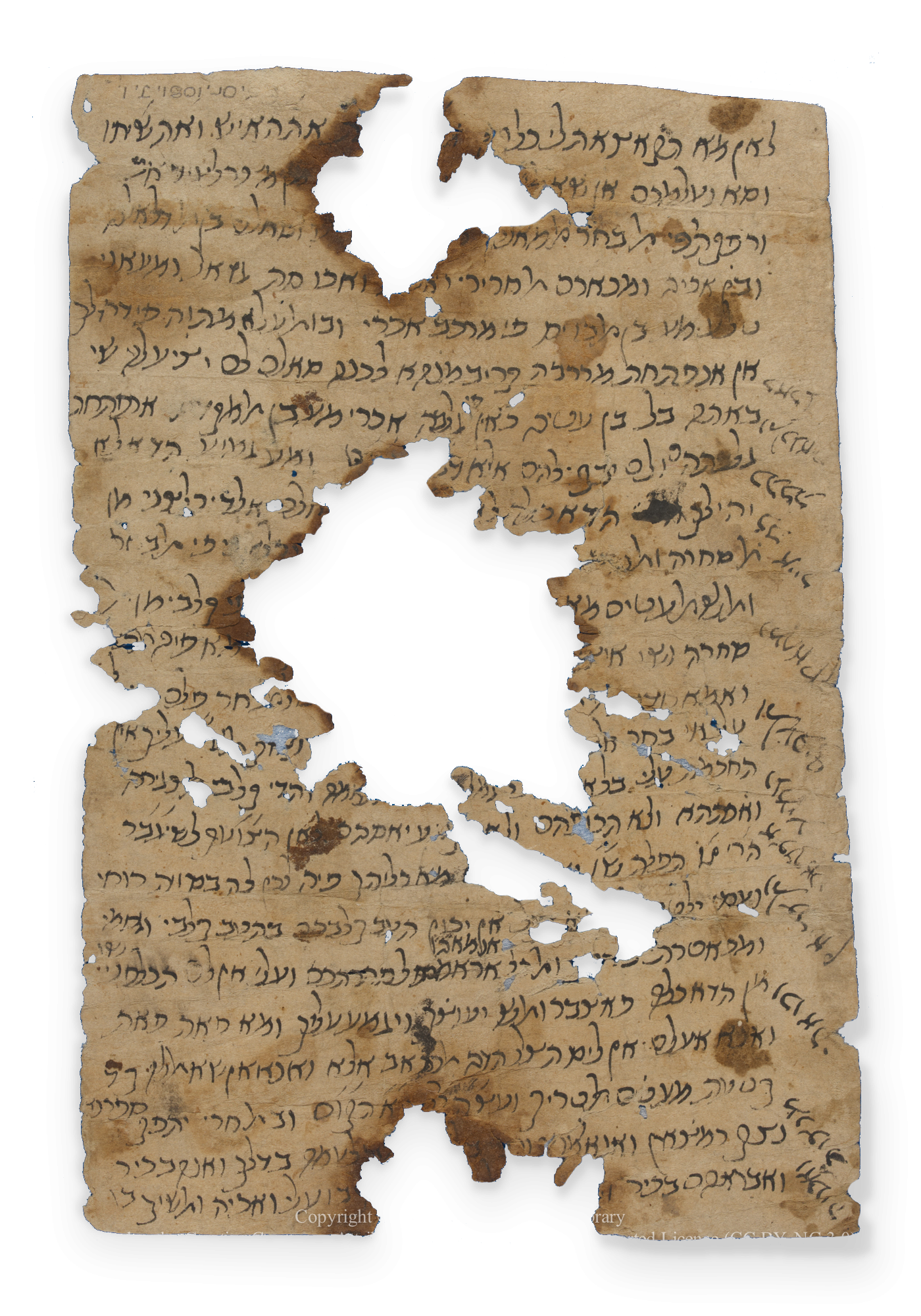

Scholars of ancient languages and cultures are turning to large language models to help decipher ancient texts. “Developments in AI are transforming how we reconstruct the records of antiquity,” said Barbara Graziosi, chair of the Department of Classics and the Ewing Professor of Greek Language and Literature. She hinted that upcoming papers, drawing from her AI-assisted work, would reveal new sides of several ancient authors. “People think that we know what Aristotle said. They’re going to be surprised!”

AI is helping scholars decipher and reconstruct irreplaceable artifacts. Marina Rustow, Princeton’s Khedouri A. Zilkha Professor of Jewish Civilization in the Near East, directs the Princeton Geniza Lab and is leading a project using neural networks to produce searchable transcriptions of thousands of documents from a medieval synagogue in Cairo. This manuscript is the last letter to Jewish philosopher Moses Maimonides from his brother David, written in 1170.

Prominent AI scholars at Princeton also include engineers, sociologists, philosophers, economists, literary critics, historians, psychologists and cognitive psychologists, physicists, political scientists, neuroscientists, biologists, astrophysicists, musicologists, chemists, geoscientists, and experts in African American culture.

“One of the reasons why Princeton is a great place to do this kind of work is because there’s great people in every field,” said Matthew Salganik, a sociologist and computational social scientist focused on the limits of predictive AI. “No matter what kind of collaborator you might be looking for, there is one of those here.”

Princeton’s many centers for interdisciplinary data science include the Center for Digital Humanities (CDH), directed by Meredith Martin, the Data-Driven Social Science Initiative (DDSS), directed by Rocío Titiunik, and CITP in public policy, directed by Narayanan.

“The problems are so big and so complicated; it’s a real strength that Princeton has so many different perspectives,” said Salganik, who headed CITP from 2019 to 2022. “The other thing that sets Princeton apart is long-term thinking. What will policymakers struggle with in the future? And how can we prepare now for those moments?”

‘In the service of humanity’

Princeton’s informal motto, “in the nation’s service and the service of humanity,” shapes the way Princeton researchers are tackling the social implications of artificial intelligence, from the economic impacts of jobs being taken over by GPT models to the risks posed by models trained on biased data that therefore encode bias.

“The discipline of computer science, for the most part, is oriented around questions that help industry make computing technology work better, faster, cheaper, and there’s nothing wrong with that,” Narayanan said. “But I think there needs to be a subset of computer science whose purpose is to hold power to account.”

Ruha Benjamin and Jonathan Mummolo are among the University scholars wrestling with the social implications of AI. Mummolo, an associate professor of politics and public affairs, is studying how computer vision models can be used to better understand police-civilian interactions and improve oversight. Benjamin, Princeton’s Alexander Stewart 1886 Professor of African American Studies and director of the Ida B. Wells Just Data Lab, confronts the ways that AI reflects and reproduces inequities and injustices.

Hazan focuses on aligning artificial intelligence with human values. “In the context of modern large language models, how do we prevent the language models from helping bad actors?” he asked. “How can we prevent them from spreading disinformation, subverting democracy, and so on? How do we detect power-seeking behavior?”

Russakovsky’s team is designing computer vision systems to resist racial, gender and other biases. Russakovsky also co-founded AI4ALL, which introduces AI to high school and college students who have diverse perspectives, voices and experiences, with the goal of unlocking AI’s potential to benefit all of humanity.

“AI is not just autonomous cars and killer robots or whatever. It’s a tool, and there’s so much good that can be done with it,” she said. “Anybody who has passion and wants to change the world can find a place for themselves in AI and can leverage AI to help them with that.”

Driving engagement

Located between New York and Washington, the central New Jersey region has exceptional strengths in higher education, healthcare, finance, sustainable energy and technology. As part of that intellectual ecosystem, Princeton maintains strong partnerships with nearby industry, government and academic institutions. These vital relationships allow the University to translate research into solutions that realize the promise of AI and address its societal implications.

The University has strong collaborations with the Princeton Innovation Center Biolabs, Google DeepMind Princeton, the NSF I-Corps Hub: Northeast Region, the AI Policy and Governance Working Group at the Institute for Advanced Study, and the Rutgers Cancer Institute of New Jersey, many of which were founded in whole or in part by members of the Princeton faculty.

“We are committed to supporting the local AI ecosystem with workforce development, innovation and entrepreneurship, contributing to a neighborhood that benefits the region while amplifying the work of our faculty,” said Rexford.

To that end, the University is hosting the inaugural convening event for a planned artificial intelligence hub for the State of New Jersey, in collaboration with the state and the New Jersey Economic Development Authority.

The invitation-only event on April 11 will welcome partners from across the region and beyond to discuss AI applications in health, finance, sustainable energy and technology, as well as the implications of AI for society. Brad Smith, vice chair and president of Microsoft Corporation, is slated to deliver the keynote address. New Jersey Gov. Phil Murphy and Princeton President Christopher L. Eisgruber will share remarks.

Hazan, who heads Google DeepMind Princeton, cited that initiative’s presence here as an indicator of Princeton’s capacity to drive innovation. “Google doesn’t have labs all over the world or at every university,” he said. “The strength of the University, the faculty and students, the commitment of the University to invest in these areas and purchase computing power and invest in the area in terms of faculty lines and in terms of equipment — all of that together makes it very compelling for people to research AI at Princeton.”

In December 2023, University and state officials announced plans for a new AI hub for New Jersey. Appearing at the event were (from left): New Jersey Economic Development Authority CEO Tim Sullivan; Princeton University Provost Jennifer Rexford; Princeton University President Christopher L. Eisgruber; New Jersey Gov. Phil Murphy; and Beth Noveck, New Jersey State Chief Artificial Intelligence Strategist.