Investigating Real-Life Communication Using fNIRS

Our current understanding of the neural underpinnings of human communication comes primarily from studies of how individual subjects, lying flat in an fMRI scanner, process isolated components of language. Using functional near-infrared spectroscopy (fNIRS), I am beginning to investigate communication during live, naturalistic interactions between multiple people. In particular, I am interested in how neural coupling, or synchrony, between children and their caregivers underlies successful language learning and social development early in life.

Measuring speaker-listener neural coupling with functional near infrared spectroscopy.

Liu, Piazza, Simony, Shewokis, Onaral, Hasson, & Ayaz. Scientific Reports, 2017.

Press: WIRED | Huffington Post | ScienceAlert | Schmidt Transformative Technology Award press release

Summary Statistics in Auditory Perception and Speech Production

I am interested in how humans use summary statistics to efficiently perceive complex sounds. In one study, we found that listeners represent the average pitch of an auditory sequence while failing to represent information about the individual tones in the sequence. Thus, our ability to process local detail seems to be supplanted by a more efficient “gist” representation.

In more recent work, we have shown that caregivers shift the summary statistics of their speech timbre (i.e., their unique vocal fingerprint) when speaking to infants, in a way that generalizes robustly across a diverse set of languages.

Humans use summary statistics to perceive auditory sequences.

Piazza, Sweeny, Wessel, Silver, & Whitney. Psychological Science, 2013.

Press: UC Berkeley press release | Science Today interview

Mothers consistently shift their unique vocal fingerprints when communicating with infants.

Piazza, Iordan, & Lew-Williams. Current Biology, 2017.

Press: PBS | Science Friday | Washington Post | Discover Magazine | The Guardian | BBC | Princeton News

Perception of Musical Pitch and Timbre

I am also broadly interested in the perception of pitch and timbre in music and other naturalistic auditory environments. In one ongoing study, we find that timbre may represent a high-level, configural property of natural sounds, processed similarly to faces in vision.

(Photo of my chamber trio in 2013.)

Resolving Ambiguity in Visual Perception

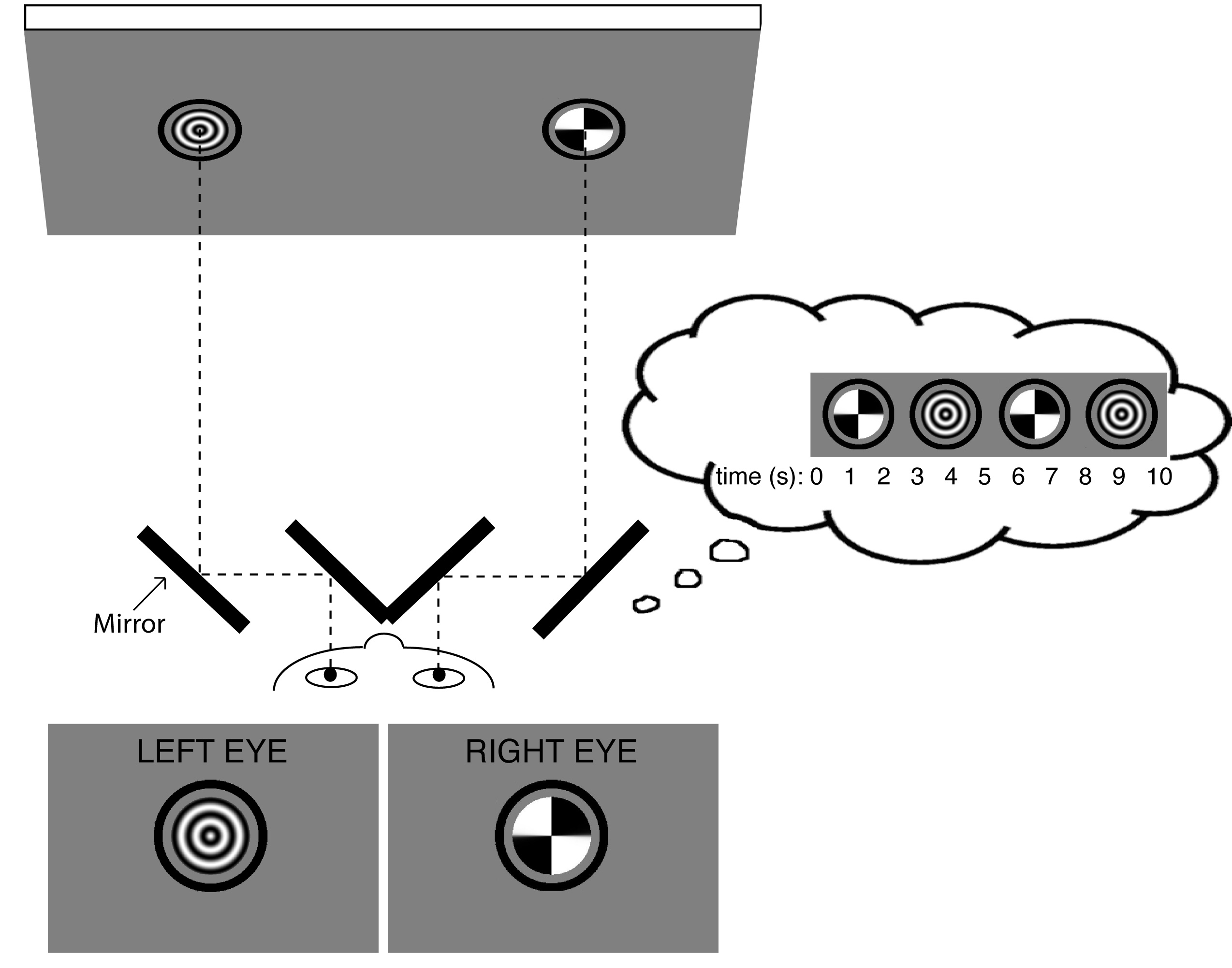

How does the brain select what we consciously perceive when the world is ambiguous? In my dissertation, I used binocular rivalry (a bistable phenomenon that occurs when two conflicting images are presented separately to the two eyes, resulting in perceptual alternation between the images) as a model of visual ambiguity to study the effects of various factors on conscious awareness.

In one study, we found that asymmetry between the two cerebral hemispheres impacts our conscious perception during binocular rivalry. Our results indicate that conscious representations differ across the visual field and that these differences persist for a long time (at least 30 seconds) after the onset of a stimulus. In a follow-up study, we found that this hemispheric filtering is based on a relative comparison of the spatial frequencies available in the current environment.

In another set of studies, we investigated the impact of predictive information on perception. First, we showed that people are more likely to perceive a given image during binocular rivalry when that image matches the prediction of a recently viewed stream of rotating gratings. More recently, we have found that arbitrary associations between sounds and images (established during a brief, passive statistical learning period) enable sounds to influence what we see during rivalry.

Persistent hemispheric differences in the perceptual selection of spatial frequencies.

Piazza & Silver. Journal of Cognitive Neuroscience, 2014.

Relative spatial frequency processing drives hemispheric asymmetry in conscious awareness.

Piazza & Silver. Frontiers in Psychology, 2017.

Predictive context influences perceptual selection during binocular rivalry.

Denison, Piazza, & Silver. Frontiers in Human Neuroscience, 2011.

Rapid cross-modal statistical learning influences visual perceptual selection.

Piazza, Denison, & Silver. Journal of Vision, 2018.