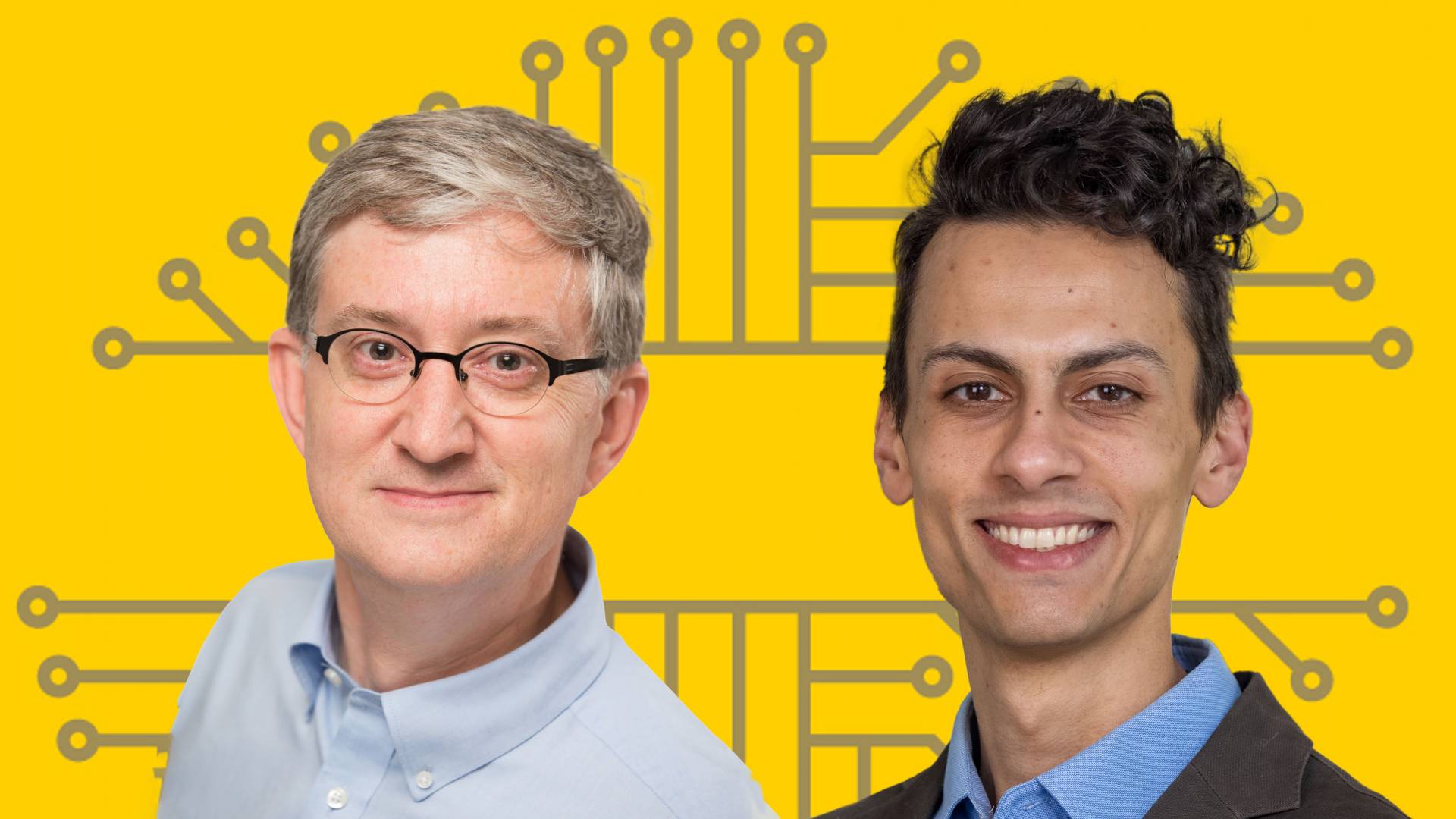

Princeton’s Ed Felten and WHYY’s Malcolm Burnley.

In the fourth episode of A.I. Nation, a new podcast by Princeton University and Philadelphia public radio station WHYY, computer science professor Ed Felten and WHYY reporter Malcolm Burnley investigate the ways that AI can be biased, how these biases can harm people of color, and what we can do to make the technology more accurate and equitable.

“The computer’s not going to lie” are the words Nijeer Parks heard from police officers while being arrested for shoplifting. As it turns out, faulty facial recognition software had led to Parks being arrested for a crime he never committed, in a town he had never been to.

Despite the advances in facial recognition technology — the same technology that allows us to unlock our smartphones seamlessly — it can be incorrect more often than we might think, particularly for Asian Americans and African Americans.

“There is this tendency to trust something because it’s high-tech, but a lot of times, it’s a complicated situation,” says Felten. “Everything that’s made by people, if we don’t take care to keep bias out of it, bias will get into it, and the results can be unfair.”

Burnley explains further, “If engineers provide the algorithm with a data set to learn from... and most of the photos are of white people, the algorithm will be worse at identifying people of color.”

Felten and Burnley discuss why predictive policing can be problematic, particularly because of the “feedback loop” that forms between skewed data and the police. As Felten explains, “The data that the police have reflects where they’ve been, and so the risk is they will decide that they should go back to the places they’ve been before, and maybe even that they should go back there even more intensively than they did before…”

In this feedback loop, the data can suggest that more petty crime is happening in a particular location, when in reality the petty crime is more likely being detected there because the location is overpoliced.
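The feedback loop can be illustrated with a small simulation. The sketch below is a toy model, not anything presented in the podcast: the two districts, the starting record counts, the crime rates, and the `intensity` parameter are all hypothetical. It assumes both districts have the same underlying crime rate, that patrols are assigned according to past records, and that recorded incidents depend on how many patrols are present.

```python
def simulate_feedback_loop(initial_records, true_rates, rounds=10,
                           intensity=1.5, total_patrols=100.0):
    """Toy model of predictive policing (all parameters are hypothetical).

    initial_records: past recorded incidents per district (must be positive).
    true_rates: underlying incidents detected per patrol in each district.
    intensity: values above 1 send disproportionately more patrols to
        districts with more past records ("even more intensively").
    Returns the list of cumulative record counts after each round.
    """
    records = list(initial_records)
    history = [tuple(records)]
    for _ in range(rounds):
        # Patrols follow past records, not the underlying crime rate.
        weights = [r ** intensity for r in records]
        total_weight = sum(weights)
        patrols = [total_patrols * w / total_weight for w in weights]
        # What gets recorded depends on where the patrols were sent.
        detected = [p * rate for p, rate in zip(patrols, true_rates)]
        records = [r + d for r, d in zip(records, detected)]
        history.append(tuple(records))
    return history


def recorded_share(records, district=0):
    """Fraction of all recorded incidents attributed to one district."""
    return records[district] / sum(records)


# Two districts with identical true crime rates but skewed starting data.
history = simulate_feedback_loop([60.0, 40.0], [0.1, 0.1])
print("starting share:", recorded_share(history[0]))
print("final share:   ", recorded_share(history[-1]))
```

Under these assumptions, the district that starts with more recorded incidents keeps accumulating a larger share of the records, even though both districts have the same underlying rate. Setting `intensity=1.0` keeps the initial skew in place rather than widening it, which still means the data never corrects itself.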

While there can be many problems with predictive policing, Felten and Burnley discuss with several guests the ways that the technology can be made more equitable. One of their guests, for example, attempted to design a crime-forecasting tool without using arrest data.

In addition to some of the solutions Felten and Burnley’s guests offer, Felten also suggests that we can use machine learning to better understand and address issues like bias in policing. For example, data shows us that officers who work too many shifts or who have recently responded to traumatic situations are more prone to overreact in highly emotional situations. Might we use this data to schedule officer shifts in a way that gives an officer who is pushed to the edge some extra time?

As Burnley points out, many people’s questions about AI in policing involve how the police may use AI against them or to surveil them, but the hope is that police departments could also use AI for increased accountability or to improve internally.

In “Echo Chambers,” the final episode of A.I. Nation, which will be released next week, Felten and Burnley will discuss the AI that’s in all of our pockets and explore how social media companies’ algorithms have changed the fabric of our country by fueling polarization.

The podcast, which launched on April 1, has been featured as “New & Noteworthy” on Apple Podcasts and as a “Fresh Find” on Spotify. A.I. Nation is available for download wherever you get your podcasts.