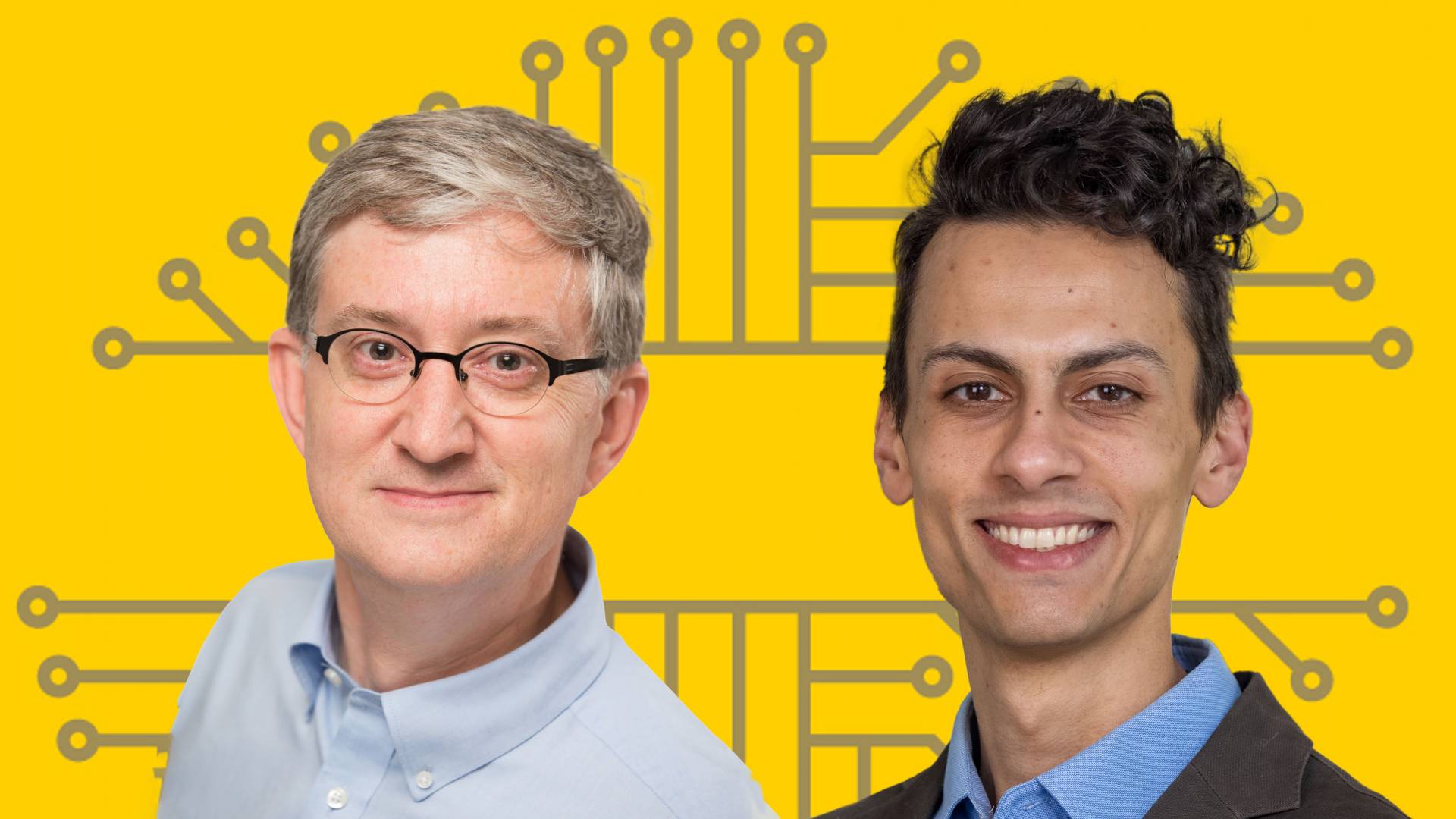

Princeton’s Ed Felten and WHYY’s Malcolm Burnley.

In the fifth and final episode of “A.I. Nation,” a podcast by Princeton University and Philadelphia public radio station WHYY, computer science professor Ed Felten and WHYY reporter Malcolm Burnley investigate the role of AI in social media and the polarizing effects its algorithms have on us, whether we realize it or not.

As Felten and Burnley point out, social media is a great example of how prevalent AI is in our lives today. “We might think of social media as talking to our friends or reading what people we know or famous people say, but really that experience is shaped in a profound way by the AI systems that decide what we're going to see,” says Felten.

In the episode, Felten and Burnley look at Facebook’s “Groups” feature, which the company has been marketing heavily in recent years. The downside to groups, however, is that they are particularly vulnerable to misinformation and disinformation. “People are more likely to trust content in a group because they feel like it’s a safe space for them… people’s guards are down,” says podcast guest Nina Jankowicz, a disinformation fellow at the Wilson Center, a Washington, D.C.-based think tank.

And the problem with Facebook’s Groups algorithm, which recommends other groups users might be interested in based on the groups they are currently involved in, is that one group can act as a gateway to another, pulling users into a “radical rabbit-hole.”

This phenomenon may contribute to jarring real-world consequences, such as the January 6, 2021, riot at the U.S. Capitol, which was instigated in part by members of more than 60 active “Stop the Steal” groups that formed after the 2020 election and called for violence in the weeks before the insurrection.

But what can Facebook and other social media companies do? It would be impossible for employees to moderate all of that content manually, and while algorithms are a useful tool, they are not foolproof.

Felten and Burnley speak with guests who propose concrete steps that social media companies could take to prevent, or at least reduce, the use of their platforms to spread misinformation.

“The idea is we will get smarter, we will get better at this, and over time, we will learn how to deal with the downsides of this technology, and we'll learn how to accentuate the positive aspects of it. So that a century from now, AI will be built into everyday life and people will have benefits that we might not be able to imagine now,” says Felten. “It might be a bumpy road to get there, but I think in the long run the positives do outweigh the negatives.”

And what can we, as citizens and consumers, do in the meantime, while we wait for the kinks to get worked out?

Felten encourages us to think about AI as a mirror that reflects us back to ourselves; in other words, while we might think that AI is teaching us, we are also teaching it how to operate.

“What we do and say on social media, how we interact with these systems, that steers how they interact with us back. So if we act like we would like people to act, then the hope is that that positive view of ourselves would get reflected back to us,” says Felten.

He adds: “Be mindful that these systems will adapt to what you do; they are learning from you all the time. And so think about what you're teaching them.”

The podcast, which launched on April 1, has been featured as “New & Noteworthy” on Apple Podcasts and as a “Fresh Find” on Spotify. “A.I. Nation” is available for download wherever you get your podcasts.